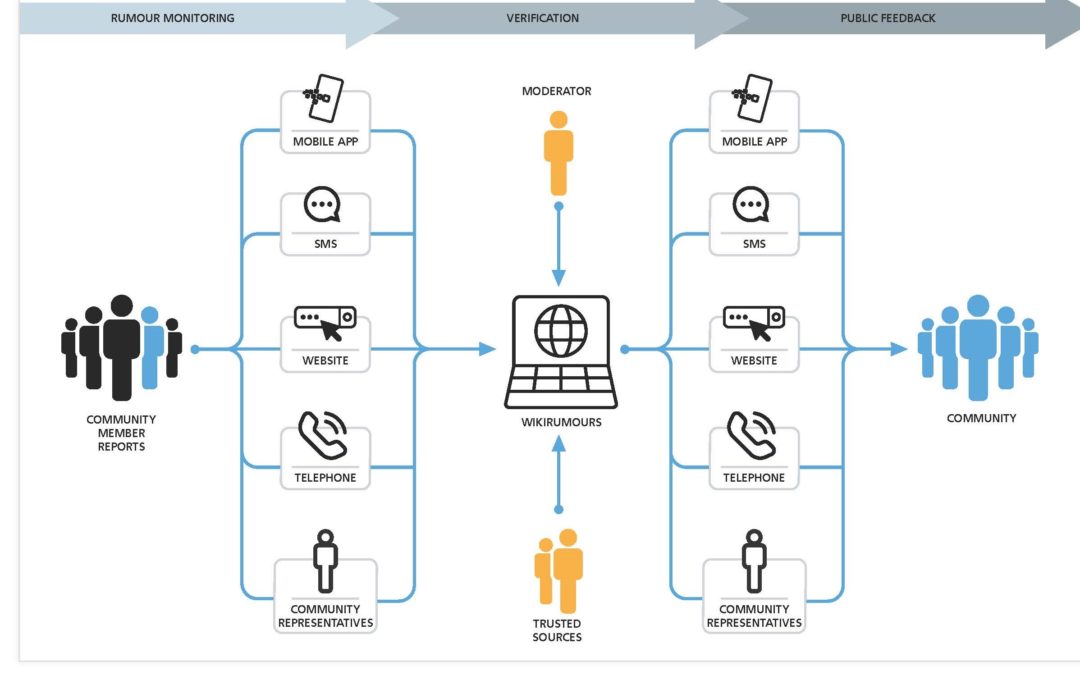

This infographic outlines the general misinformation management process that we use across our projects.

Blog #1: How are misinformation, hate speech, and violence linked and what are their consequences?

The Sentinel Project has spent a lot of time thinking about the relationship between misinformation, hate speech, and mass atrocities. This has become an increasingly salient topic due to a dramatic increase in the prevalence of misinformation worldwide, mainly as it proliferates online through various social media channels. While misinformation and hate speech leading to violence are nothing new, their potential to cause harm in an increasingly digitized and interconnected world is unprecedented. However, we need to do more to understand the relationship between misinformation and hate speech, which are often discussed completely separately. While they are indeed discrete phenomena, they are also closely related and often overlap in practice, with many examples of harmful content containing both false information and hateful rhetoric.

This first blog post in our series looks at examples of misinformation leading to violence and then discusses the relationship between hate speech and violence. Better understanding these examples will then inform a conversation about how to prevent online content from leading to offline violence.

Misinformation and violence

The links between misinformation and violence are starting to be better understood. We define misinformation as an umbrella term that captures both cases “when false information is shared, but no harm is meant” (Wardle & Hossein, 2017) and disinformation, which is a type of misinformation that is spread intentionally, typically to achieve a specific goal.

There are a number of instances of misinformation leading to violence, with many of these examples being found in the Global South. They range from cases targeting individuals to those which involve intercommunal violence. In one case from 2018, Ricardo and Alberto Flores were burned to death in Acatlán, Mexico when a mob mistakenly identified them as child abductors following rumours about such criminals that circulated on WhatsApp. In another example, a BBC report explains how misinformation about unrest caused by armed robbers in Ogun, Nigeria led people to panic and prepare for violence. Rumours spread on Twitter under the hashtags #OgunUnrest and #LagosUnrest. However, as the article notes, the police did not find any evidence of armed robbery, pointing to the explosive consequences of misinformation that had no basis in reality. Another BBC report describes how in 2018, a mob in India attacked three men who were visiting relatives in Handikera. After a message about potential child abductors had circulated in a WhatsApp group, the men became targets and one of them was killed. Further BBC reporting describes lynchings that occurred throughout India in 2018, which were also linked to misinformation that had circulated on WhatsApp.

Other instances are found in the Global North. For example, in April 2019 police arrested 20 people accused of attacking Roma people in Parisian suburbs after false rumours of child abductions spread on social media. In relation to the COVID-19 pandemic, a BBC article from February 2020 asserted that Chinese tourists in Ukraine were attacked by local people who believed that they had COVID-19.

Hate speech and violence

While the attention paid to misinformation and its impacts is still relatively new, there are ample examples of links between hate speech and violence. Mondal, Silva, and Benevenuto (2008) define hate speech “as an offensive post, motivated, in whole or in part, by the writer’s bias against an aspect of a group of people” (p.87). The United Nations strategy and plan of action on hate speech defines the term as:

any kind of communication in speech, writing or behaviour, that attacks or uses pejorative or discriminatory language with reference to a person or a group on the basis of who they are, in other words, based on their religion, ethnicity, nationality, race, colour, descent, gender or another identity factor.

The links between hate speech and violence are more firmly established, both in the literature and on the ground. For example, with regard to political figures, Piazza (2020) states that “countries where politicians frequently weave hate speech into their political rhetoric subsequently experience more domestic terrorism. A lot more.” He continues, explaining that “what public figures say can bring people together, or divide them. How politicians talk affects how people behave – and the amount of violence their nations experience.” Similarly, Williams (2019) finds that “online hate victimization is part of a wider process of harm that can begin on social media and then migrate to the physical world.” He concludes that:

What we do know is that social media is now part of the formula of hate crime. A hate crime is a process, not a discrete act, with victimisation ranging from hate speech through to violent attacks. This process is set in a geographical, social, historical, and political context.

A study by the American Bar Association (2019) found that “systematic, state-aligned campaigns to denigrate and indirectly threaten human rights defenders and marginalized communities contribute to a climate of violence and impunity that increases the risk of real-world violence against human rights defenders.” Beyond these research examples that demonstrate the relationship between hate speech and physical violence, on-the-ground examples are even more abundant. In Nigeria, Ezeibe (2020) finds that “an entrenched culture of hate speech is an oft-neglected major driver of election violence.” In his focus group results, participants discussed the perspectives that:

- Hate speech fuels intimidation and physical attacks on voters

- Hate speech leads to post-election violence

- Hate speech induces election violence targeted at members of other ethnic groups

- Hate speech facilitates violence during political rallies and conventions

- Hate speech promotes attacks on election materials and officials

- Attacks induced by hate speech provoke retaliatory attacks

- Hate speech encourages political assassinations

Similarly, Rights for Peace (2021) finds that “Sudan is seeing an escalation of violence characterised by clashes between ethnic groups, often ignited by instances of hate speech and incitement to violence.”

In Kenya, vernacular radio stations were found to have played a role in spreading hate speech during the 2007 presidential elections. As Somerville (2011) writes, radio stations “periodically broadcast hate speech about perceived opponents from other communities, at times appeared to condone or even incite violence or the expulsion of people from particular areas, and demonstrated considerable partisanship.” These examples, among notable others such as the well-known 1994 Rwandan genocide, point to the prevalent links between hate speech and violence.

Ways forward?

It is clear that there is a growing global problem related to misinformation, hate speech, and physical violence. However, formulating appropriate responses is challenging, especially when governments are involved, since policy recommendations related to influencing speech require striking a balance between regulation and fundamental rights and freedoms. These challenges are particularly thorny when discussing misinformation on its own. First, due to the problem’s multifaceted nature, there is no universal remedy for misinformation. Any universal policy recommendation is problematic due to the high level of variation between jurisdictions in terms of societal norms and political conditions. Second, managing misinformation via policy and law introduces a tension between the desire to reduce the prevalence of a dangerous phenomenon and the need to preserve freedom of expression. Even if the authority to regulate disinformation is not deliberately exploited, its unintended effects on civil liberties make it a potentially slippery slope that would be difficult to reverse. There are genuinely important questions about whether or not governments should be playing a central role in this sector.

As we know from our experiences with COVID-19 public health measures and regulations, observers may react strongly when they hear arguments based on freedom of expression, and freedoms in general. These types of arguments may seem like a defence of extremism, with advocates caring more about freedom of expression than about preventing violence. However, the Sentinel Project’s experience has shown that misinformation management is not a clear-cut exercise. While many government efforts to counter misinformation may be sincerely intended to serve the public interest and protect those who are targeted, this is not a problem that can be easily regulated. Any new government regulation should be weighed against the potential loss of digital freedom. This question is relevant in all countries, including liberal democracies, but becomes even more salient in countries that lack strong protections for freedom of expression as well as other freedoms. There have already been numerous examples worldwide of governments developing strict legal penalties for disseminating misinformation, such as in Ethiopia, Nigeria, Malaysia, Singapore, the Philippines, and India, and all of these laws leave ample room for government overreach. Thus, one must exercise caution about government action since regulation might undermine democratic ideals, whether intentionally or unintentionally. While some of these efforts may be well-intentioned attempts to counter harmful misinformation, others are almost certainly attempts by authoritarian regimes to stifle dissent and control public discourse.

It is therefore important not to view governments as inherently neutral parties. Governments of all types – including liberal democracies – often spread misinformation for their strategic interests. For instance, Freedom House studies digital authoritarianism and assesses the degree of digital freedom in various countries, an exercise that found digital freedom to be declining during the most recent reporting period. Freedom House authors Adrian Shahbaz and Allie Funk write that “For the tenth consecutive year, users have experienced an overall deterioration in their rights, and the phenomenon is contributing to a broader crisis for democracy worldwide.” Although liberal democracies may not disseminate disinformation to serve their own interests as often or as explicitly as authoritarian states, governments will generally act in self-serving ways.

This point does not mean that governments should do nothing in response to misinformation, and there is a wide range of opportunities and channels beyond legislation to lessen its impact. For example, we recommend that governments continue to fund media literacy campaigns. By pointing out the harms of misinformation, such campaigns help citizens make their own decisions about what to believe on the Internet. The difficulty of regulating misinformation also highlights the need for programming such as the Sentinel Project’s work in several countries, since civil society actors also have a role to play in misinformation management. Governments can also require transparency from social media companies regarding hate speech and the incitement of violence. When there is a clear link between misinformation and hate speech online and physical violence offline, social media companies should have speedy and transparent protocols in place for responding.

To conclude, this first blog post in our series looked at examples of misinformation and hate speech leading to violence. The solutions discussed above are starting points in a broader conversation about how to tackle the growing impacts of the online proliferation of misinformation and hate speech while balancing other considerations. We hope that the series sparks more debate about how to move forward on this topic. Stay tuned for the next post!